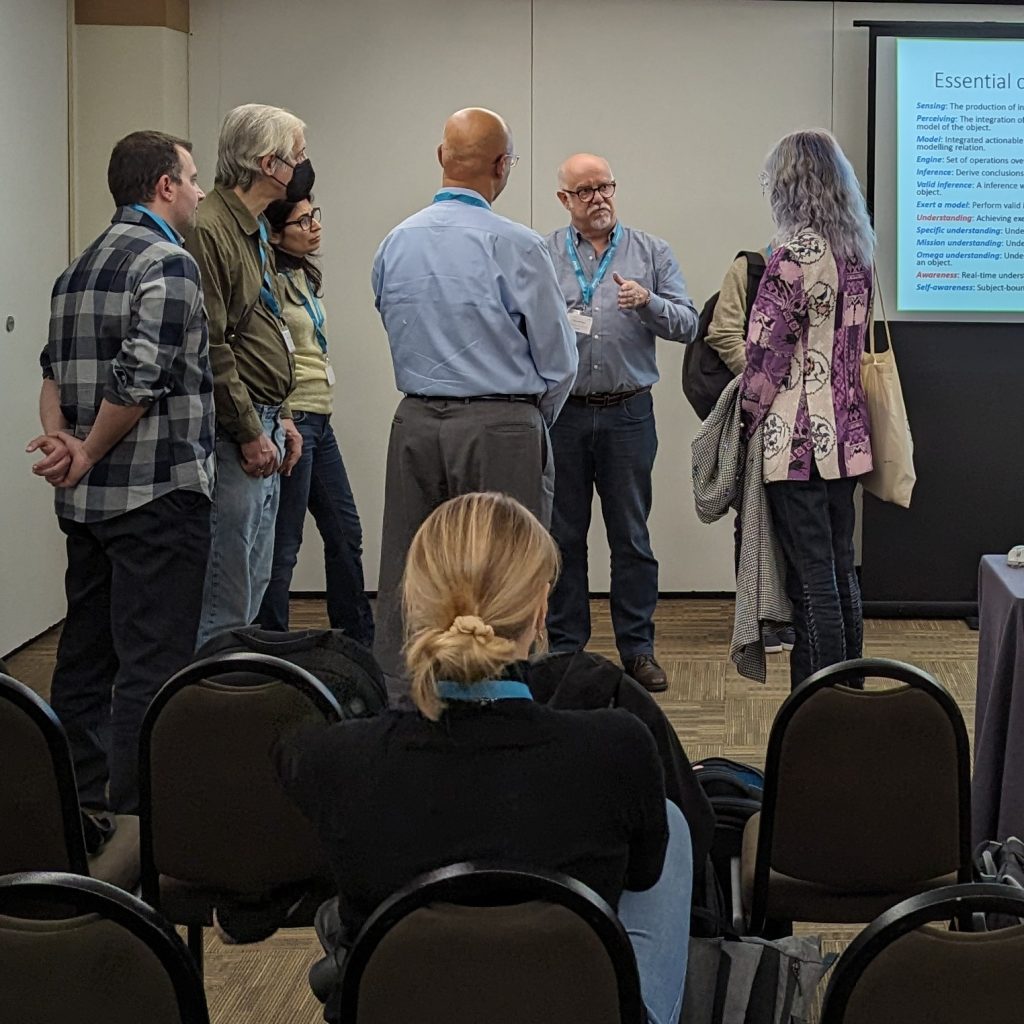

At the ICAART 2024 Conference in Rome we had a Special Session, organized by Luc Steels, on the Awareness Inside topic funded by the EIC Pathfinder programme.

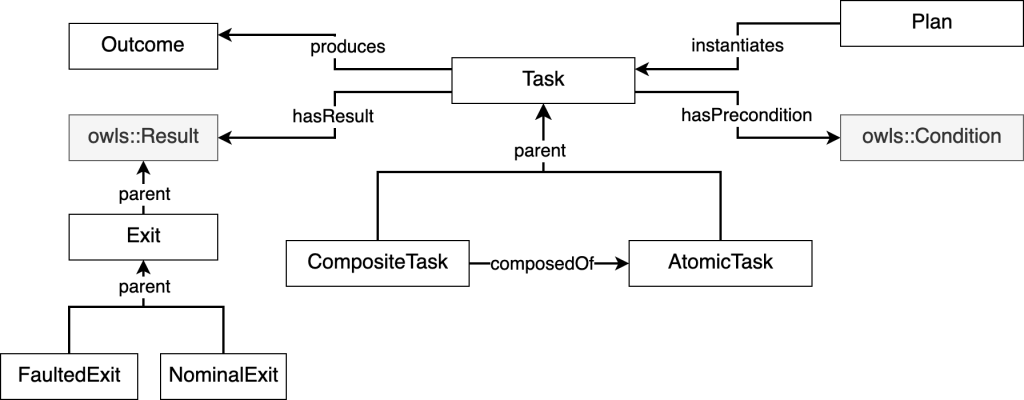

In the session I presented the initial steps towards a theory of awareness that the ASLab team is developing within the CORESENSE and METATOOL projects.

We gave a short, ten-minute presentation, followed by an interesting conversation about the ideas presented and the possibility of developing a complete model-based theory of aware agents.

The presentation addressed the possibility of a solid theory of awareness for both humans and machines, and the elements that such a theory should have. We discussed two of these elements: a clear delimitation of the domain of phenomena, and the essential concepts that form the cornerstones of the theory.

These are the concepts we proposed and discussed:

- Sensing: The production of information for the subject from an object.

- Perceiving: The integration of the sensory information bound to an object into a model of the object.

- Model: Integrated actionable representation; an information structure that sustains a modelling relation.

- Engine: Set of operations over a model.

- Inference: Derive conclusions from the model by applying engines to it.

- Valid inference: An inference whose result matches the phenomenon at the modelled object.

- Exert a model: Perform valid inferences from the model.

- Understanding: Achieving exertability of a model of the object/environment.

- Specific understanding: Understanding concerning a specific set of exertions.

- Mission understanding: Understanding concerning a set of mission-bound exertions.

- Omega understanding: Understanding all possible exertions of a model in relation to an object.

- Awareness: Real-time understanding of sensory flows.

- Self-awareness: Subject-bound awareness. Awareness concerning inner perception.
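To make the relations between model, engine, inference, valid inference and specific understanding more concrete, here is a toy sketch in Python. It is an illustrative assumption, not the formalization presented in the talk or the paper: the falling-ball "object", the names `Model`, `ENGINES`, `PHENOMENA`, `infer`, `is_valid` and `understands`, and the tolerance `tol` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical object: a ball dropped from 20 m under g = 9.81 m/s^2.
# These ground-truth values stand in for "the phenomenon at the modelled object".
TRUE_H0, TRUE_G = 20.0, 9.81


def _height(h0: float, g: float, t: float) -> float:
    """Height above ground at time t for a ball dropped from h0."""
    return max(0.0, h0 - 0.5 * g * t * t)


def _speed(h0: float, g: float, t: float) -> float:
    """Falling speed at time t; zero once the ball has landed."""
    return g * t if _height(h0, g, t) > 0.0 else 0.0


# What the object actually does, observed through each kind of query.
PHENOMENA: Dict[str, Callable[[float], float]] = {
    "height_at": lambda t: _height(TRUE_H0, TRUE_G, t),
    "speed_at": lambda t: _speed(TRUE_H0, TRUE_G, t),
}


@dataclass
class Model:
    """The subject's information structure about the object (its parameters)."""
    initial_height: float
    gravity: float


# Engines: a set of operations over a model.
ENGINES: Dict[str, Callable[[Model, float], float]] = {
    "height_at": lambda m, t: _height(m.initial_height, m.gravity, t),
    "speed_at": lambda m, t: _speed(m.initial_height, m.gravity, t),
}

# An exertion is one (engine name, query) pair.
Exertion = Tuple[str, float]


def infer(m: Model, exertion: Exertion) -> float:
    """Inference: apply an engine to the model to derive a conclusion."""
    engine, query = exertion
    return ENGINES[engine](m, query)


def is_valid(m: Model, exertion: Exertion, tol: float = 0.5) -> bool:
    """Valid inference: the result matches the phenomenon within tol."""
    engine, query = exertion
    return abs(infer(m, exertion) - PHENOMENA[engine](query)) <= tol


def understands(m: Model, exertions: Iterable[Exertion], tol: float = 0.5) -> bool:
    """Specific understanding: every exertion in the given set is valid."""
    return all(is_valid(m, e, tol) for e in exertions)


if __name__ == "__main__":
    mission = [("height_at", 1.0), ("speed_at", 1.0), ("height_at", 3.0)]
    accurate = Model(initial_height=20.0, gravity=9.81)
    biased = Model(initial_height=20.0, gravity=5.0)  # wrong gravity estimate
    print(understands(accurate, mission))             # True
    print(understands(biased, mission))               # False
    print(understands(biased, [("height_at", 0.0)]))  # True: a narrower set
```

Under these assumptions, the model with the biased gravity estimate still "understands" the trivial exertion set `[("height_at", 0.0)]` but not the mission set, which illustrates why understanding is always relative to a set of exertions. Omega understanding would require validity over all possible exertions, which a finite check like this one cannot establish.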

Get the complete presentation:

And the paper: