The HUMANOBS Project

Humanoids that Learn Socio-Communicative Skills by Observation

2009-2012 / EU FP7 231453

Human communicative skills rely on a complex mixture of social and spatio-temporal perception-action processes. Autonomous virtual agents that interact with people could benefit greatly from socio-communicative skills; endowing them with such skills, however, could mean decades of person-years of manual programming. Enabling the agents to learn these skills is an alternative solution; the learning mechanisms required for this, however, were also well beyond the state of the art.

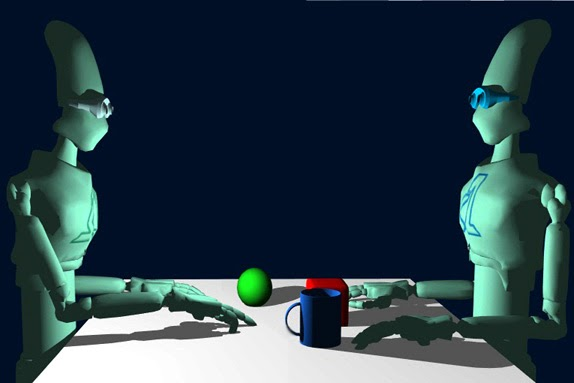

Our goal in HUMANOBS was to develop new cognitive architectural principles to allow intelligent agents to learn socio-communicative skills by observing and imitating people in dynamic social situations on the job. Skill development, in our approach, is a fundamental architectural feature: Learning happens through unique architectural constructs specified by developers, coupled with the ability of the architecture to automatically reconfigure itself to accommodate new skills through observation.

As an appropriate and challenging demonstration, the resulting cognitive architecture was used to control a virtual humanoid television host capable of conducting interviews with users and running a 10-minute TV program. By observing and imitating humans, the TV host was able to acquire increasingly complex socio-communicative skills; by the end of the project it had reached a level of socio-communicative skill that was low but nevertheless comparable to that of an average human television show host.

While we initially targeted only socio-communicative skills, we developed the system architecture and imitation learning process in a generic way, so that the principles developed could be applied to other equally complicated tasks.

Within the framework of this project, the learning process was supervised by humans; our long-term goal, however, is to provide physical agents with full autonomy for learning such multimodal skills in dynamic social and physical situations.

Reference

Autonomous Acquisition of Natural Language. Eric Nivel, Kristinn R. Thórisson, Bas R. Steunebrink, Haris Dindo, Giovanni Pezzulo, Manuel Rodriguez, Carlos Hernandez, Dimitri Ognibene, Jürgen Schmidhuber, Ricardo Sanz, Helgi P. Helgason, Antonio Chella and Gudberg K. Jonsson. Proceedings of IADIS International Conference on Intelligent Systems & Agents, Lisbon, Portugal, July 15-17, 2014, pp. 58-66.